A study being conducted at the University of Surrey investigates two things: the optimisation of the software interface used in remote simultaneous interpreting, and the use of automatic speech recognition in remote simultaneous interpreting. The overall goal of the study is to explore how interpreters can be supported when performing remote simultaneous interpreting assignments.

Over the past few years, remote simultaneous interpreting (RSI), which was traditionally performed from an interpreting booth located in a conference venue or in an interpreting hub, has experienced an unprecedented expansion and has been transformed by technology.

Indeed, initial RSI solutions, which date back to the 1970s, were booth-based, allowing interpreters to work in teams, from physical interpreting booths with traditional consoles.

More recently, however, a new generation of cloud-based simultaneous interpreting delivery platforms (SIDPs) has emerged, and these aim at virtually recreating the interpreter’s traditional console and work environment (Braun, 2019). SIDPs have had a significant impact on the interpreters’ lives and working conditions. For instance, for the very first time, SIDPs allow conference interpreters to work from home, or in an office, and to be physically separated from their booth partners.

As one would imagine, this shift towards cloud-based environments and into the interpreters’ home/office, which was likely accelerated by the Covid-19 pandemic, raises new challenges and questions.

Previous research on booth-based RSI has found that this modality of interpreting is more tiring and is perceived as more stressful than conventional simultaneous interpreting (e.g., Moser-Mercer, 2003), and that the limited visual input available in RSI, where the interpreters see the speakers and audiences only on a screen, constitutes a particular problem (e.g., Mouzourakis, 2006). Pointing in a similar direction, a recent survey of conference interpreters, who at the time of responding were mostly performing cloud-based RSI assignments, shows that 83% of respondents consider RSI more difficult than on-site interpreting, 50% believe that their average performance is worse under RSI conditions and 67% think that working conditions are worse in RSI (Buján and Collard, 2021).

Whilst previous RSI research has focused on identifying problems and their sources, one question that has become pressing in the shift towards online working is how problems can be mitigated and how interpreters can be supported in their performance of RSI assignments.

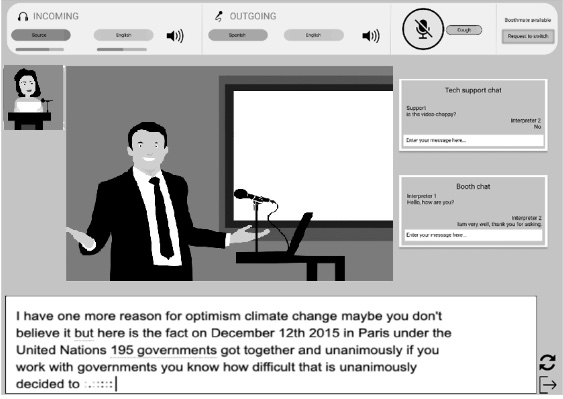

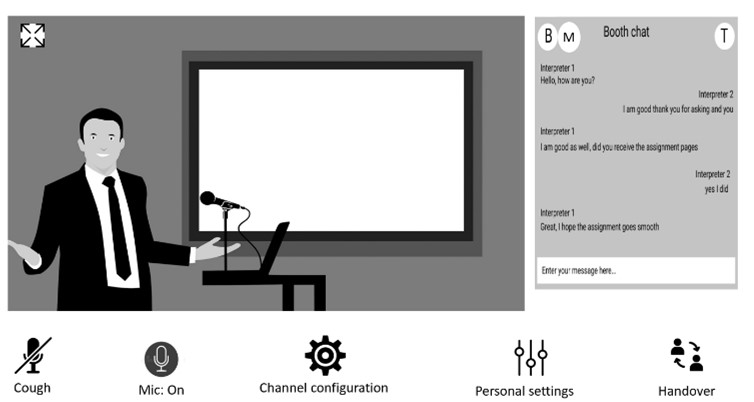

Initial explorations of the cloud-based solutions suggest that there is room for improving the visual interfaces of many widely used SIDPs (Buján and Collard, 2021; DG SCIC, 2019). Effective and user-friendly software interfaces are likely to be instrumental for cloud-based RSI. Because these interfaces add a new dimension not only to simultaneous interpreting but also to RSI, gaining a better understanding of their role in the interpreting process is a crucial task for new RSI research.

Moreover, initial investigations of the use of automatic speech recognition (ASR) as a supporting tool in computer-assisted interpreting (CAI) have been conducted in relation to both traditional conference interpreting settings (Fantinuoli, 2017; Desmet et al., 2018; Cheung and Tianyun, 2018) and, to a lesser extent, RSI (Fantinuoli et al., 2022; Rodriguez et al., 2021). These studies have focused on the potential of ASR to support interpreters when dealing with specific problem triggers in simultaneous interpreting, such as technical terminology, accents and numbers (Mankauskienė, 2016). They suggest that this use of ASR in traditional conference interpreting settings is promising (Desmet et al., 2018; Cheung and Tianyun, 2018). However, very little is known about other uses of ASR in booth-based simultaneous interpreting or about the integration of ASR in RSI.

With that in mind, the study we are currently conducting at the University of Surrey, which comprises two PhD projects, focuses on two aspects of support, namely (a) the optimisation of the RSI interface and (b) the integration of ASR in an RSI workflow and an RSI interface to aid source text comprehension.

The study’s overarching aim is to optimise the interpreters’ working conditions and performance in cloud-based RSI, and to improve their user experience.

Our main research questions are:

- To what extent can ASR and an improved RSI interface support the interpreters and what impact does this have on their interpreting quality and overall user experience?

- What is the most effective way of presenting an ASR generated transcript and other visual information to the interpreters?

To address these questions, we ran two focus groups with a total of seven interpreters with different professional and academic backgrounds, and designed an online experiment.

The focus group discussions, which helped us plan the research design in detail and refine the variables to be tested, covered the following five points:

- introductions and overview of RSI;

- pros and cons of RSI;

- analysis of current interfaces and participants’ design ideas;

- presentation of a research-informed interface and participant reviews of the interface;

- wrap-up and conclusions.

Based on the findings from the focus groups and a review of the RSI and user experience research literature, we designed an experimental study which aims to collect, on the one hand, video recordings of professional interpreters performing RSI assignments under different conditions and, on the other hand, quantitative data from questionnaires.

The experiment, which is conducted remotely and requires no supervision, has two parts, comprising two 25-minute interpreting exercises with a break in between, and four short questionnaires. The first part focuses on the quality of the interpreter’s output and on their experience under two conditions, i.e. with and without an ASR-generated transcript available as an additional input source. The second part investigates the effect of the type of software interface, comparing a more minimal interface with the fuller, conventional SIDP interface, as well as the effect of the interpreter’s view of the speaker (a close-up view or a gesture view) on interpreting performance and user experience.

Once completed, the study will report on the insights gathered during the focus groups and the experimental phase. On the one hand, we will present our analysis of ASR as an additional linguistic and visual input source and its impact on the interpreters’ output and user experience. On the other hand, we will report on observations and the statistical analysis of the questionnaires, as well as any key relationships found between individual participant profiles and their user experience ratings for the different interfaces and speaker views tested.

Bibliography

Braun, S.: Technology and interpreting. In: O’Hagan, M. (ed.) Routledge Handbook of Translation and Technology. Routledge, London (2019)

Buján, M., Collard, C.: ESIT research project on remote simultaneous interpreting (2021)

Cheung, A.K.F., Tianyun, L.: Automatic speech recognition in simultaneous interpreting: a new approach to computer-aided interpreting. In: Ewha Research Institute for Translation Studies International Conference 2018 (2018)

Desmet, B., Vandierendonck, M., Defrancq, B.: Simultaneous interpretation of numbers and the impact of technological support. In: Fantinuoli, C. (ed.) Interpreting and Technology, pp. 13–27. Language Science Press, Berlin (2018)

DG SCIC: Interpreting platforms: consolidated test results and analysis. European Commission’s Directorate General for Interpretation (DG SCIC), 17 July 2019

Fantinuoli, C.: Speech recognition in the interpreter workstation. In: Proceedings of the Translating and the Computer 39 Conference, pp. 367–377. Editions Tradulex, London (2017)

Fantinuoli, C., Marchesini, G., Landan, D., Horak, L.: KUDO Interpreter Assist: Automated Real-Time Support for Remote Interpretation (2022). http://arxiv.org/abs/2201.01800

Moser-Mercer, B.: Remote interpreting: assessment of human factors and performance parameters. Communicate! Summer 2003 (2003)

Mouzourakis, P.: Remote interpreting: a technical perspective on recent experiments. Interpreting 8(1), 45–66 (2006)

Rodriguez, S., et al.: SmarTerp: a CAI system to support simultaneous interpreters in real-time. In: Proceedings of Triton 2021 (2021)

Eloy Rodríguez González

Eloy is a conference interpreter, a conference interpreting trainer and a PhD student at the University of Surrey whose research interests are remote simultaneous interpreting, interpreter-machine interaction and the use of technology and AI in interpreter-mediated environments.